Information theory

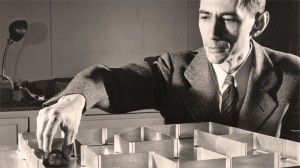

A mathematical theory of communication, by Claude Shannon (1948)

A mathematical theory of communication, by Claude Shannon (1948)

Uncertainty and information

Shannon's thoughts on communication...

- A message consists of some sequence of symbols generated by a 'source',

- transmitted through a 'channel' to a receiver.

- Is there a *most compact* way to encode a message?

- What if there's noise in the channel? What can be done so that the message still arrives, despite noise?

Uncertainty, Information, Probability

Which statement would be more *surprising*?

- I flipped a coin and it turned up heads!

- I rolled a dice, and a 3 came up!

What are the relative probabilities of the two events?

Which statement carries more/less *information*?

- I rolled out of bed today, and it was snowing!

- I rolled out of bed today, and the temperature outside was above absolute zero!

A source spits out characters...

01110010011000000101101...

CURTYWWG BNMMPTYUXXIICNWKEFVOVK SOFFDDDJKDSSCJCJFGMEWUX...

LITTLE RED RIDING RAN THROU....

How much uncertainty ($H$) is there about the next character in the sequence??

Summarizing Carter, 20.1-20.3:

The uncertainty, in units of "bits" (Binary digITS), of a set of possible outcomes with probabilities $\{p_1, p_2, p_3,....\}$ is $$H=-\sum_i p_i \log_2 p_i$$

This is also the amount of information contained in a report of which outcome actually occurred.

For a fair coin toss, the possible outcomes are H ($p_H$=1/2) or T ($p_T$=1/2). Therefore, the uncertainty in a single coin toss is $$\begineq H&=-(p_H\log_2 p_H+P_T\log_2 p_T)\\ &=-\left(\frac12\log_2 \frac12+\frac12\log_2 \frac12\right)=-\left(\frac 12(-1)+\frac 12(-1)\right)=1\text{bit}. \endeq$$ This means that you could report the outcome of a coin flip with a single binary digit: The outcome could be "0" or "1".

In what follows, you may find the change of base formula useful $$\log_2 x = \ln x / \ln 2.$$

Trying to make this plausible, calculate $H$ for a few possible-to-calculate situations:

- Fair coin tosses: Let's say that you toss a fair coin $3$ times...

H,T,T

How many "bits" are needed to report the outcome? - Toss a fair coin $n$ times...

H,T,T,H,T,H,H,H,T,T,H... - Certain events: Flip a two-headed coin, and you find a typical sequence to be...

T,T,T,T,T,T, [99 years with no change] , T,T,T,T.... What is the next symbol in the sequence? What is $H$? - One decimal digit: How many bits in a decimal digit?

- Eight-sided dice:

1,3,1,4,8,7,6,8,2,... - Loaded dice: with sides 1,2,3,2,4,2,8,2, should have *less* uncertainty than a fair dice, right??

- 26 letters plus space?

- Coins again: What is $H(x)$ for a coin with probability $x$ of H, and probability $1-x$ of T? [Graph]