Lagrange multipliers

How to find the maximum (or minimum) of...

- a function of many variables,

- subject to one or more constraints?

...a question that preoccupied Giuseppe Luigi Lagrangia, a.k.a. Joseph-Louis Lagrange, a mathematician who was famous in his own lifetime.

- Invited to Berlin by King Frederick of Prussia,

- Invited to Paris by King Louis XVI,

- Turned melancholy in Paris, only coming out of his funk as he gradually grew aware of, and then alarmed by, the French Revolution taking place around him.

- Revolutionaries asked him to stay, despite a general expulsion of foreigners.

Extrema with constraints: multivariate method

We'll find the maximum of a function $f$ of two independent variables, $f(x,y)$, subject to one constraint, and then generalize to (many) more than one constraint, and more than two variables.

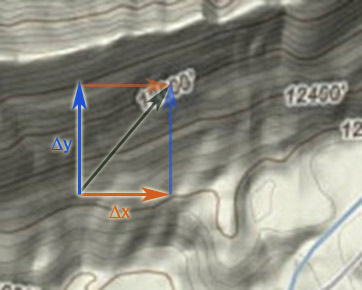

Here's the Calc III treatment of Lagrange multipliers in terms of paths in the mountains and gradients.

At the extremum, there should be no change in the function $f$ for small steps ($dx$ or $dy$) in any of the independent variables. That is:

$$[df]_{ext} = \left[\frac{\partial f}{\partial x} dx + \frac{\partial f}{\partial y} dy\right]_{ext} = 0.$$

Using gradient terminology,

$$\myv \grad f\equiv \frac{\partial f}{\partial x} \uv i + \frac{\partial f}{\partial y} \uv j $$

and

$$d\myv r = dx\, \uv i + dy\, \uv j$$

So

$$df=\myv \grad f \cdot d\myv r.$$

But in our problem $dx$ and $dy$ are not actually independent of each other. There's a constraint equation between $x$ and $y$, which we'll state abstractly as:

$$\phi(x,y) = {\rm const}.$$

$$\Rightarrow d\phi = \frac{\partial \phi}{\partial x} dx + \frac{\partial \phi}{\partial y} dy = d({\rm const}) =0.$$

Where...

$$d\phi=\myv \grad \phi \cdot d\myv r.$$

Solving each of the two conditions $df=0$ and $d\phi=0$ separately for the ratio $dy/dx$, setting the results equal, and rearranging, we find:

$$\frac{\partial f / \partial x}{\partial \phi / \partial x} = \frac{\partial f / \partial y}{\partial \phi / \partial y} = -\alpha.$$

We simply call the common ratio '$-\alpha$', and we can write this now as two equations:

$$\frac{\partial f}{\partial x} +\alpha\frac{\partial \phi}{\partial x} = 0;\ \frac{\partial f}{\partial y} +\alpha\frac{\partial \phi}{\partial y} = 0.$$

We found that the gradients are parallel at an extremum, that is to say...

$$\myv \grad f =-\alpha \myv \grad \phi.$$

And this means that the ratio of each component of $\myv \grad f$ to the corresponding component of $\myv \grad \phi$ is the same for $x$ and $y$: it is $-\alpha$.

The constraint:

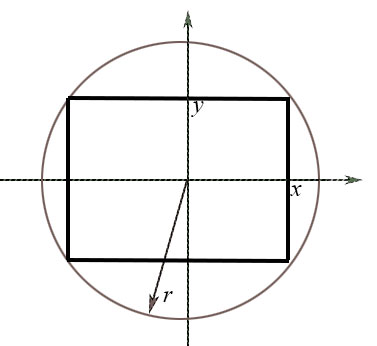

$$\phi(x,y)=x^2+y^2=r^2=2.$$

The solution: solve these two equations (together with the constraint) for $x$, $y$, and a common constant $\alpha$:

$$\frac{\partial f}{\partial x} +\alpha\frac{\partial \phi}{\partial x} = 0;\ \frac{\partial f}{\partial y} +\alpha\frac{\partial \phi}{\partial y} = 0.$$

Do it! [We found that $\alpha=\pm 2$, which implies that either $x=y$ or $x=-y$. Either way, the figure that maximizes the area is a square with equal height and width. Since $x^2+y^2=2$, that height (or width) occurs at $|x|=1$.

Each one of the equations above involves derivatives with respect to just one of the independent variables. So, generalizing to a function of $n$ variables $f(x_1, x_2,\ldots,x_n)$ and one constraint equation $\phi(x_1,x_2,\ldots,x_n)={\rm const}$:

$$\frac{\partial f}{\partial x_i} +\alpha\frac{\partial \phi}{\partial x_i} = 0,\ i=1,2,\ldots,n.$$

Working things out for the case of a second constraint equation, $\eta(x_1,x_2,...x_n)=0$, we'd eventually find:

$$\frac{\partial f}{\partial x_i} +\alpha\frac{\partial \phi}{\partial x_i} +\beta\frac{\partial \eta}{\partial x_i} = 0,\ i=1,2,\ldots,n.$$

$\alpha$ and $\beta$ are known as Lagrange multipliers.
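To make the two-constraint conditions concrete, here is a minimal sketch on a made-up problem (not from the original notes): extremize $f=x+y+z$ subject to $\phi=x^2+y^2=2$ and $\eta=x+z-1=0$. The functions, the candidate point, and the multiplier values below are all assumptions chosen for illustration. The code checks that the three conditions vanish at the candidate and compares against a brute-force scan along the constraint curve.

```python
import math

# Hypothetical example (an assumption for illustration):
#   f   = x + y + z
#   phi = x^2 + y^2      (constraint: phi = 2)
#   eta = x + z - 1      (constraint: eta = 0)

def grad_f(x, y, z):
    return (1.0, 1.0, 1.0)

def grad_phi(x, y, z):
    return (2.0 * x, 2.0 * y, 0.0)

def grad_eta(x, y, z):
    return (1.0, 0.0, 1.0)

def residuals(point, alpha, beta):
    """Left-hand sides of df/dx_i + alpha*dphi/dx_i + beta*deta/dx_i = 0."""
    gf, gp, ge = grad_f(*point), grad_phi(*point), grad_eta(*point)
    return [a + alpha * b + beta * c for a, b, c in zip(gf, gp, ge)]

# Candidate obtained by solving the three conditions by hand.
y_star = math.sqrt(2.0)
candidate = (0.0, y_star, 1.0)
alpha = -1.0 / (2.0 * y_star)
beta = -1.0
res = residuals(candidate, alpha, beta)

def f_on_constraints(t):
    """f along the curve satisfying both constraints, parametrized by angle t."""
    r = math.sqrt(2.0)
    x, y = r * math.cos(t), r * math.sin(t)
    z = 1.0 - x  # enforces eta = 0
    return x + y + z

# Brute-force maximum of f over the constraint curve.
best = max(f_on_constraints(2.0 * math.pi * k / 3600) for k in range(3600))
f_candidate = sum(candidate)

print(res, best, f_candidate)
```

If the conditions are doing their job, the residuals should be essentially zero and the scan's best value should agree with $f$ at the candidate point.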

In Carter's terminology

For landscapes, we said

$$\Delta f \approx \frac{\partial f}{\partial x}\,\Delta x + \frac{\partial f}{\partial y}\, \Delta y.$$
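The linear approximation above can be checked numerically. The sketch below assumes an illustrative landscape $f=4xy$ and an arbitrary base point and step (both chosen here, not given in the notes), estimates the partial derivatives by central differences, and compares the linear estimate with the actual change in $f$.

```python
def f(x, y):
    """Illustrative landscape (an assumption): f = 4xy."""
    return 4.0 * x * y

def partials(x, y, h=1e-6):
    """Central-difference estimates of (df/dx, df/dy) at (x, y)."""
    dfdx = (f(x + h, y) - f(x - h, y)) / (2.0 * h)
    dfdy = (f(x, y + h) - f(x, y - h)) / (2.0 * h)
    return dfdx, dfdy

def linear_estimate(x, y, dx, dy):
    """Delta f ~ (df/dx) * dx + (df/dy) * dy."""
    dfdx, dfdy = partials(x, y)
    return dfdx * dx + dfdy * dy

# Arbitrary base point and small step, chosen for illustration.
x0, y0 = 1.0, 0.5
dx, dy = 1e-3, -2e-3

exact = f(x0 + dx, y0 + dy) - f(x0, y0)
approx = linear_estimate(x0, y0, dx, dy)
print(exact, approx, exact - approx)
```

The two numbers differ only by a term of second order in the step sizes, which is why the approximation improves as the steps shrink.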

Example

Find the maximum area of a rectangle (the maximizing problem) that can be inscribed inside a circle of radius $r=\sqrt 2$ (the constraint).

The function to be maximized:

$$\text{Area}=f(x,y)=(2x)(2y)=4xy.$$
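As a sketch of how the two multiplier conditions work out for this $f$, taking $\phi=x^2+y^2$ from the constraint:

$$\frac{\partial f}{\partial x} +\alpha\frac{\partial \phi}{\partial x} = 4y+2\alpha x = 0;\ \frac{\partial f}{\partial y} +\alpha\frac{\partial \phi}{\partial y} = 4x+2\alpha y = 0.$$

Solving each for $\alpha$ gives

$$\alpha = -\frac{2y}{x} = -\frac{2x}{y}\ \Rightarrow\ x^2=y^2,$$

and with $x^2+y^2=2$ this means $x^2=y^2=1$. The case $x=y$ corresponds to $\alpha=-2$, and the case $x=-y$ to $\alpha=+2$.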

Hmmm, looking at this a little closer... Since we've defined Area=$4xy$, the area corresponding to $x=-y$ is a *negative* number. So we've actually found a maximum (at $x=y$) and a minimum (at $x=-y$). For any $x$ there are two possible $y$ values, $y=\pm\sqrt{2-x^2}$ (using our constraint equation), so the Area function can be expressed as a function of $x$ alone: $f=\pm 4x\sqrt{2-x^2}$. It is a double-valued function (it can be plotted on WolframAlpha), with maxima at $(x,y)=(1,1)$ and $(-1,-1)$ and minima at $(1,-1)$ and $(-1,1)$. So, really there are 4 solutions :->]

Notice that the maxima (or minima) all occur at $|x|=1$.
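A quick numerical cross-check of this example, as a sketch rather than a definitive treatment: the code below scans points on the constraint circle $x^2+y^2=2$, finds where the area $4xy$ is largest and smallest, and confirms that the multiplier conditions vanish at $(1,1)$ with $\alpha=-2$ and at $(1,-1)$ with $\alpha=+2$. The helper names and the grid resolution are assumptions made here.

```python
import math

R2 = 2.0  # constraint value: x^2 + y^2 = r^2 = 2

def area(x, y):
    return 4.0 * x * y

def scan_circle(n=3600):
    """Evaluate the area at n evenly spaced points on the constraint circle."""
    r = math.sqrt(R2)
    pts = []
    for k in range(n):
        t = 2.0 * math.pi * k / n
        x, y = r * math.cos(t), r * math.sin(t)
        pts.append((area(x, y), x, y))
    return pts

def multiplier_residuals(x, y, alpha):
    """Left-hand sides of df/dx + alpha*dphi/dx = 0 and its y counterpart."""
    return (4.0 * y + alpha * 2.0 * x, 4.0 * x + alpha * 2.0 * y)

pts = scan_circle()
best = max(pts)   # tuple (area, x, y) with the largest area
worst = min(pts)  # tuple (area, x, y) with the smallest area

res_max = multiplier_residuals(1.0, 1.0, -2.0)
res_min = multiplier_residuals(1.0, -1.0, 2.0)

print(best, worst, res_max, res_min)
```

The scan is only as fine as its grid, so it is a sanity check on the analytic answer, not a replacement for it.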